Why should AI scholars collaborate with Public Policy scholars?

Gaurav Verma

Computer Science Ph.D. student at Georgia Tech | Pursuing Minor in Public Policy

Email: gverma@gatech.edu

The advancing forefront of AI

The advancements in AI have been nothing short of extraordinary. To illustrate, let's take a trip down memory lane to 2017, when I was working on a course project involving image processing. The task was to modify or fill in pixels in an image by identifying matching regions within the same image. As an undergraduate, I implemented an algorithm and integrated it with a user interface, and the result felt magical at the time.1 Fast forward six years to 2023: readily available tools can now do this (and much more!) from natural-language instructions. These tools require no coding, are far more widely adopted, and handle user input seamlessly, which highlights the remarkable pace of AI development.
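For readers curious about what "filling in pixels by identifying matching regions within the same image" involves, here is a toy Python sketch. It is not my 2017 implementation (that one was in MATLAB). It is a simplified, greedy take on exemplar-based hole filling in the spirit of Efros and Leung's non-parametric texture synthesis. It assumes a tiny grayscale image stored as nested lists, with `None` marking missing pixels, and the function names are hypothetical.

```python
from typing import List, Optional

# Hypothetical toy representation: a grayscale image as rows of ints, None = missing pixel.
Image = List[List[Optional[int]]]


def window(img: Image, r: int, c: int, half: int) -> List[Optional[int]]:
    """Flattened (2*half+1) x (2*half+1) window centred on (r, c); out-of-bounds cells are None."""
    h, w = len(img), len(img[0])
    return [
        img[i][j] if 0 <= i < h and 0 <= j < w else None
        for i in range(r - half, r + half + 1)
        for j in range(c - half, c + half + 1)
    ]


def known_count(win: List[Optional[int]]) -> int:
    """Number of known (non-None) cells in a window."""
    return sum(v is not None for v in win)


def fill_holes(img: Image, half: int = 1) -> Image:
    """Greedily fill missing pixels by copying from the best-matching fully known patch."""
    out = [row[:] for row in img]
    h, w = len(out), len(out[0])

    # Source patches: windows that lie entirely inside the image and are fully known.
    sources = []
    for r in range(h):
        for c in range(w):
            win = window(out, r, c, half)
            if known_count(win) == len(win):
                sources.append(win)
    if not sources:
        raise ValueError("no fully known patch to copy from")
    centre = len(sources[0]) // 2

    while True:
        missing = [(r, c) for r in range(h) for c in range(w) if out[r][c] is None]
        if not missing:
            return out
        # Onion-peel order: fill the hole pixel whose window has the most known neighbours.
        r, c = max(missing, key=lambda p: known_count(window(out, p[0], p[1], half)))
        target = window(out, r, c, half)

        def ssd(src: List[Optional[int]]) -> int:
            # Sum of squared differences, compared only where the target pixel is known.
            return sum((t - s) ** 2 for t, s in zip(target, src) if t is not None)

        # Copy the centre value of the closest-matching source patch into the hole.
        out[r][c] = min(sources, key=ssd)[centre]
```

On a striped image with a couple of pixels knocked out, the sketch restores the stripes because the best-matching patches come from elsewhere in the same pattern. Real inpainting tools work on much larger patches, use priority schemes that respect edges, and search far more efficiently than this brute-force loop.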

With an OpenAI account and 0 lines of code (2022):

There are more examples of this. GPT-4, a popular large language model, wrote a blog post on 'Why should AI scholars collaborate with Public Policy scholars?' It's a decent read; check it out here.2 It discusses how such collaborations can guide the ethical use of AI and inform Public Policy with technical expertise, making the collaboration mutually beneficial.

The risky parts of AI development

The deployment of the AI models behind these popular tools brings many risks. One of the most pressing is the generation of misleading content, whether intentionally by malicious actors or unintentionally. Here are two examples: one intentional and one unintentional.

Generating a misleading image within 30 seconds: Stable Diffusion generation for the prompt "a hyper-realistic photograph of a joe biden receiving an award from donald trump." The text in green was added manually to avoid potential confusion.

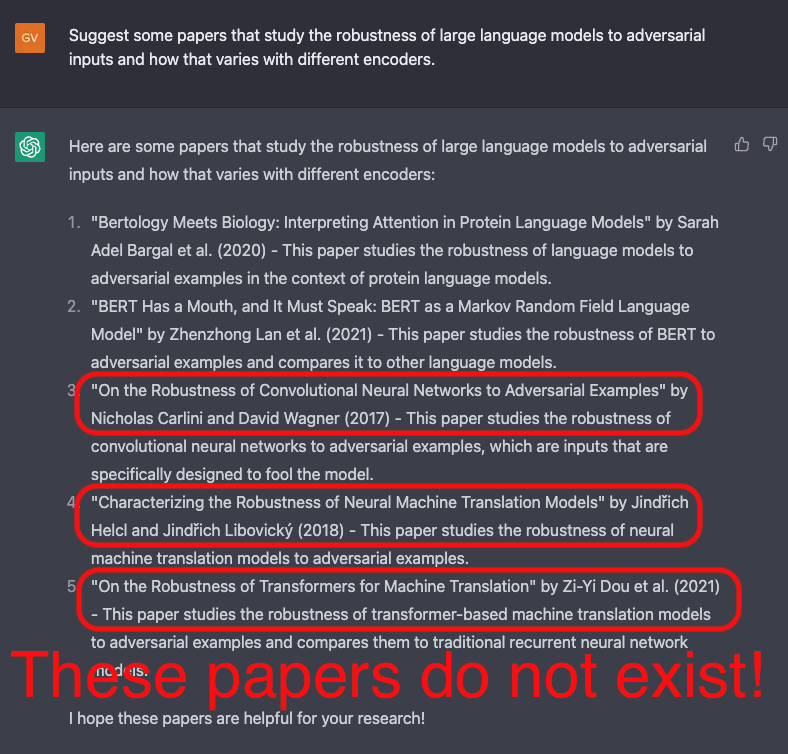

ChatGPT hallucinates prior work that does not exist. While Carlini and Wagner did write an influential related paper on evaluating the robustness of neural networks, ChatGPT did not list a more recent paper that is directly related to the topic in question.3

Given the risk of generating incorrect information, questions about the broader impact of these tools have attracted a lot of attention. For instance, our research has looked at the mental health costs of misinformation: people who are exposed to it are more likely to experience heightened anxiety. Similarly, some of our research has raised questions about the lack of robustness and the inequitable outcomes that arise when AI models are used in social applications.

Lagging Policy around AI research and development

Jack Clark, co-founder of Anthropic and a member of the National AI Advisory Committee (NAIAC), recently presented policy challenges and opportunities around AI R&D to the Congressional AI Caucus (slides here). He discussed challenges such as preventing abuse of AI systems, tracing AI outputs, bias in AI models, and national security. The key takeaway is that policies around AI R&D are lagging behind AI research itself: the capabilities (whether dangerous or beneficial) already exist in some form or another, but the policies to regulate them do not.

Scientific societies within computing, like ACM and IEEE, have their own codes of conduct that also apply to AI research and development. However, these guidelines are broad and do not cover the full range of possibilities that arise as AI becomes interwoven with society. Besides understanding AI capabilities and limitations, we also need to understand social theories, political context, engagement across stakeholders, and the logistics of implementing and evaluating policies. Policy scholars are experts in these areas.

What's Public Policy research? A quick take

Public Policy scholars carry out systematic studies that collect evidence for proposing new laws, regulations, guidelines, or even individual actions, or for improving existing ones. I like how Georgia Tech's Kaye Husbands Fealing puts it: "It is about making the human condition better. That's the bottom line." With this bottom line in mind, scholars collect evidence to address pressing questions with real-world applicability, for instance, what public and private values are encoded in patents, and how AI and automation affect worker well-being. This is what research in Public Policy looks like. The findings of these studies are often aimed at governments (and at companies, which often work with governments in creating policies), which may use them to inform new policies. Occasionally, the collected evidence leads to policy proposals. In such cases, researchers may be invited to provide evidence, typically in the form of policy memos.4 Connecting research with policy proposals usually involves additional considerations like political positioning (think red versus blue) and implementation costs.

What could collaborations between AI and Policy scholars look like?

AI researchers and practitioners probe the limitations of existing systems and why they arise, and they can anticipate the bottlenecks in future AI R&D. For instance, it is well known that AI models can be biased and fickle, and that they sometimes leak personally identifiable information (PII). AI researchers also know how some of these problems can be avoided, which is an active area of AI research: de-duplicating the pre-training corpus, for example, can demonstrably weaken the memorization of PII by language models. AI researchers also investigate the trade-off landscape of AI capabilities. Fairness and adversarial robustness, for instance, are both desirable properties of AI systems, but with existing approaches, gains in one tend to come at the expense of the other.
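As a rough illustration of the de-duplication idea, here is a minimal Python sketch of exact de-duplication: each document is normalized (lowercased, whitespace collapsed), hashed, and only the first copy of each hash is kept. The intuition is that text repeated many times in the training data is more likely to be memorized, so removing copies reduces that repetition. This is a simplification under stated assumptions; pipelines used in practice often also remove near-duplicates and repeated substrings (for example with MinHash or suffix arrays), and the function names here are hypothetical.

```python
import hashlib
import re
from typing import Iterable, List


def normalize(doc: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies hash identically."""
    return re.sub(r"\s+", " ", doc.strip().lower())


def dedupe(docs: Iterable[str]) -> List[str]:
    """Keep the first occurrence of each normalized document, preserving order."""
    seen = set()
    kept = []
    for doc in docs:
        key = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            kept.append(doc)
    return kept
```

For example, a corpus containing both "Call Alice at 555-0100." and "call  Alice at 555-0100. " keeps only the first of the two, so the (fictional) phone number appears once instead of twice. Hashing keeps memory proportional to the number of unique documents rather than their total length.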

Public Policy scholars explore how science, technology, and innovation interact with society, shape the economic landscape, and benefit national interests. They also examine how to propose, design, and evaluate policies that encourage and regulate R&D in relevant areas. While these topics may sound familiar to CS researchers who work at the intersection of AI and society, Policy circles study and debate them more rigorously. For instance, the notion of the 'Public Value' of innovation is formally taxonomized, argued over, and distinguished from the notion of 'Private Value.'5 These discussions also consider whether the private sector should share responsibility for realizing public values.

Naturally, collaborations between Policy and AI scholars can integrate these distinct areas of expertise.

• AI researchers can benefit from Public Policy scholars' insights and perspectives on how AI can affect society, and from their input on how to design and evaluate policies that encourage the development of beneficial AI technologies.

• On the other hand, Policy scholars can benefit from the technical expertise of AI researchers to better understand the capabilities and limitations of AI, and to design policies around the use of AI technologies and their impact on society that are informed by the latest AI research.

• By working together, AI and Policy scholars can help ensure that AI is developed and used in a way that maximizes its potential benefits while minimizing its risks and negative impacts.

How to collaborate with Public Policy researchers?

My perspective on this is somewhat limited as I have only explored the possibilities of these collaborations as a doctoral student. Hopefully, my experience will grow with time.

For CS/AI students, taking courses in and around Public Policy could be a great start. This is also a good way to get formally introduced to topics like how science is funded, how policies are proposed (what goes into drafting a policy memo), and how policies are analyzed and evaluated.

Collaborating with Public Policy scholars on research projects is also a good way to get started. This works because much Policy research uses similar, if not identical, natural language processing, text mining, machine learning, or statistical techniques. For instance, in recent work, Prof. Diana Hicks and collaborators used BERT-based language models to show that adults are motivated to seek out the most credible sources and to engage with challenging material like National Academies reports, which makes a case for open access. Understanding AI methods, their applicability, and their limitations gives AI researchers something to bring to the table as collaborators. Engaging in the broader project and joining conversations with Policy scholars leads to knowledge spillovers that help AI researchers learn the field's know-how.

Bottom line: Collaboration between CS/AI researchers and Public Policy scholars is crucial for developing responsible and effective policies that can harness the potential of AI to address real-world challenges. This can be facilitated by inviting Public Policy scholars to give talks at CS conferences, working together on research projects to gain a deeper understanding of the policy implications of our work, and actively engaging with policy frameworks to ensure that our research is responsible and addresses the broader societal implications of AI.

The context behind this article: In 2022, I started to pursue a minor in Public Policy alongside my primary research in AI for Good. I am working with Philip Shapira, Barbara Ribeiro, and Sergio Pelaez - Public Policy scholars with tremendous experience in the field. This piece is my reflection on what I have learned over the last few months and why I think it is important for more AI scholars to work with Policy scholars.

Footnotes:

1 Here is the code in case you are interested (disclaimer: it's in MATLAB!): GitHub

2 I think I can beat it: GPT-4 cannot weave language generation together with my personal experience. Plus, it can't generate multimodal content (yet)! :)

3 Deconstructing this failure of ChatGPT would take a longer discussion, but one point is worth noting here: as Web search becomes "conversational," language-related hallucinations could affect the quality and accuracy of the results presented.

4 Resources on how to write policy memos: Tips on writing a policy memo, the Broad Institute's guide on policy memos. Prof. Diana Hicks offers a course at Georgia Tech (PUBP 6401 - Sci, Tech, and Public Policy) that includes learning how to draft a policy memo. The specifics vary across countries; for instance, in the UK, Policy researchers meet with MPs to share their insights.

5 Check out Divali Legore and Christine Webster's literature review on AI and Public Values, and Ribeiro and Shapira (2023).